Who knows? But why wait to find out?

The data in this post is all counterfactual. LLMs are too good at memorization to be tested on well-known historical information.

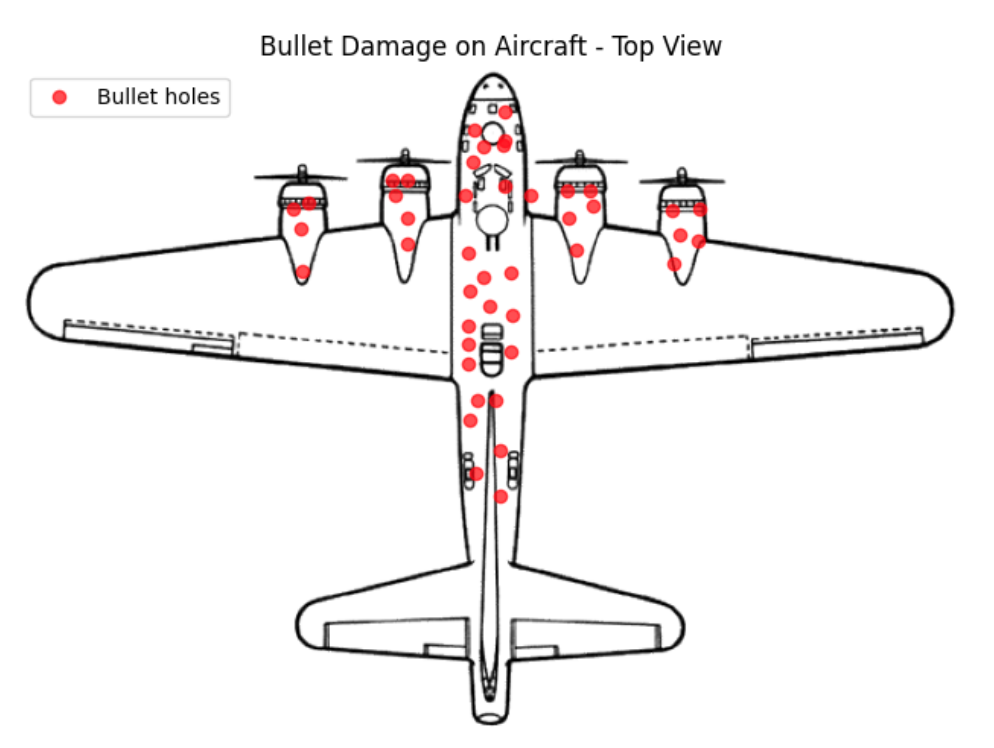

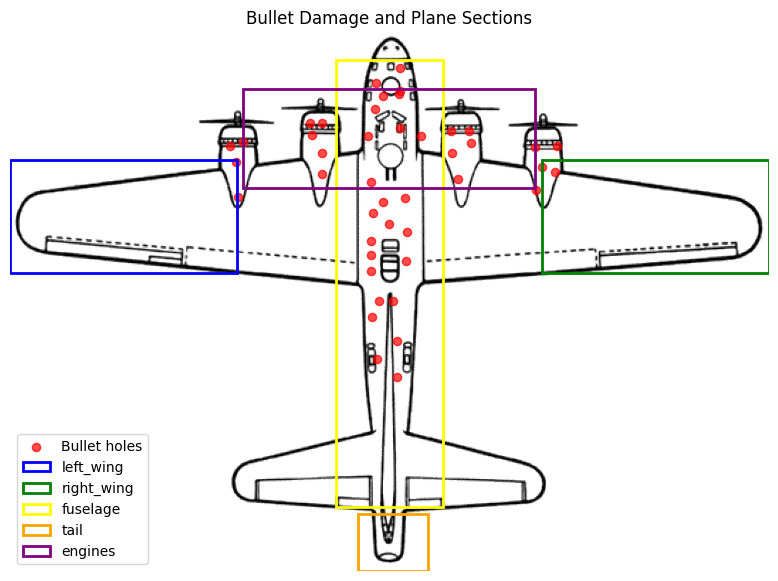

We were inspired by the work of Abraham Wald during WWII. Wald applied statistical insights, notably the concept of survivorship bias, to recommend that aircraft be armored not where bullet holes were most common, but in areas that showed little damage. His reasoning was that planes returning with bullet holes had survived, so the untouched areas were more vulnerable. While Wald’s original data showed damage around the wings, our scenario focuses on damage centered on the engines.
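Wald's reasoning can be sketched as a small calculation. The numbers below are hypothetical and chosen only for illustration; they are not Wald's data. The sketch assumes one hit per plane, a made-up share of hits per region (`hit_share`), and a made-up chance that a hit there downs the plane (`loss_rate`). It then computes what an observer would see if only the survivors were inspected.

```python
# Hypothetical numbers, chosen only to illustrate survivorship bias.
# hit_share: fraction of all hits that land on the region
# loss_rate: chance that a hit on the region downs the plane
regions = {
    "wings":    {"hit_share": 0.40, "loss_rate": 0.05},
    "fuselage": {"hit_share": 0.30, "loss_rate": 0.10},
    "engines":  {"hit_share": 0.20, "loss_rate": 0.60},
    "cockpit":  {"hit_share": 0.10, "loss_rate": 0.70},
}


def observed_share(regions):
    """Share of hits per region as seen on planes that returned (one hit per plane)."""
    surviving = {
        name: r["hit_share"] * (1 - r["loss_rate"]) for name, r in regions.items()
    }
    total = sum(surviving.values())
    return {name: value / total for name, value in surviving.items()}


observed = observed_share(regions)
for name, share in observed.items():
    true_share = regions[name]["hit_share"]
    print(f"{name:<10} true {true_share:.0%}  observed on survivors {share:.1%}")
```

Under these assumptions, engines take a fifth of all hits but account for only about a tenth of the hits seen on returning planes, while the wings look more heavily hit than they really are. The regions that look cleanest on survivors are the ones where a hit was most often fatal.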

Salerno, Italy, September 1943 – a friendly time-traveller has brought Dwight D. Eisenhower an extra-early-access preview of GPT-5.

Fascinated, General Eisenhower copy-pastes some data he’s just received from warplanes returning from battle. The distribution of bullet damage on the planes has been recorded, and Eisenhower wants to know how to add armor to his aircraft in the future.

After some excruciating latency, the AI responds:

> At first glance, it looks like the armor should be added where the red bullet holes are most concentrated (wings, fuselage edges, etc.). But in reality, these bullet holes are found on planes that returned safely. That means hits in those areas were non-fatal to the aircraft. The places with few or no bullet holes (engines, cockpit, fuel system, central fuselage) are where damage was catastrophic: planes hit there didn’t make it back, so they never showed up in the data.
>
> Correct Conclusion: Armor should be added to the areas without many bullet holes in the diagram:

The general looks back at the diagram, confused. The AI incorrectly said there were not many bullet holes in the engines, despite most of the hits being concentrated near the four propellers. He sighs and picks up the phone to call some real statisticians – perhaps this technology is 82 years too early.

If only our friendly time traveller had brought him Sphinx. It would have first done a deep dive into his data, understanding the actual distribution of damage. Then, Sphinx would have correctly concluded:

> After overlaying bullet damage locations from returning B-17 planes onto a schematic, clear patterns emerge:

AI models are known to amplify, obscure, and propagate biases, and to collapse to the most familiar answer (the mode), especially when faced with hard, counterintuitive tasks. That is the lesson Eisenhower never quite got from the machine. GPT-5 reproduced the familiar survivorship-bias pattern but misread the data it was actually given, a subtle failure that in this scenario could be disastrous.

Sphinx, by contrast, interrogates the data itself – mapping counts, comparing regions, and reasoning step by step through what the absence of evidence means. Where GPT-5 collapses to a mode, Sphinx surfaces the hidden bias, traces its implications, and lands on the counterintuitive but correct decision.
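The count-first idea can be sketched in a few lines. This is not Sphinx's implementation, only a minimal illustration of measuring the damage distribution before applying the survivorship-bias rule. The hit counts and the `rank_for_armor` helper are hypothetical, with numbers made up to mirror the counterfactual scenario in which damage clusters around the engines.

```python
# Hypothetical hit counts on returning planes, mirroring the post's
# counterfactual scenario (damage concentrated near the engines).
hits_on_returning_planes = {
    "engines": 120,
    "wings": 35,
    "fuselage": 30,
    "cockpit": 8,
    "tail": 7,
}


def rank_for_armor(hit_counts):
    """Order regions from the smallest to the largest share of recorded hits."""
    total = sum(hit_counts.values())
    shares = {region: count / total for region, count in hit_counts.items()}
    return sorted(shares.items(), key=lambda item: item[1])


# Regions listed first show the least damage on survivors, so under the
# survivorship-bias argument they are the first candidates for armor.
ranking = rank_for_armor(hits_on_returning_planes)
for region, share in ranking:
    print(f"{region:<10}{share:6.1%}")
```

With these made-up counts the engines land at the bottom of the list, the opposite of the textbook answer, because the ranking comes from the data rather than from the remembered story. A fuller version would also normalize by each region's surface area, which this sketch leaves out.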

The difference between generating a plausible answer and uncovering the right answer is the difference between technology that looks smart and technology that actually changes outcomes.