
AI models carry deep-seated biases that quietly distort decision-making and analysis.

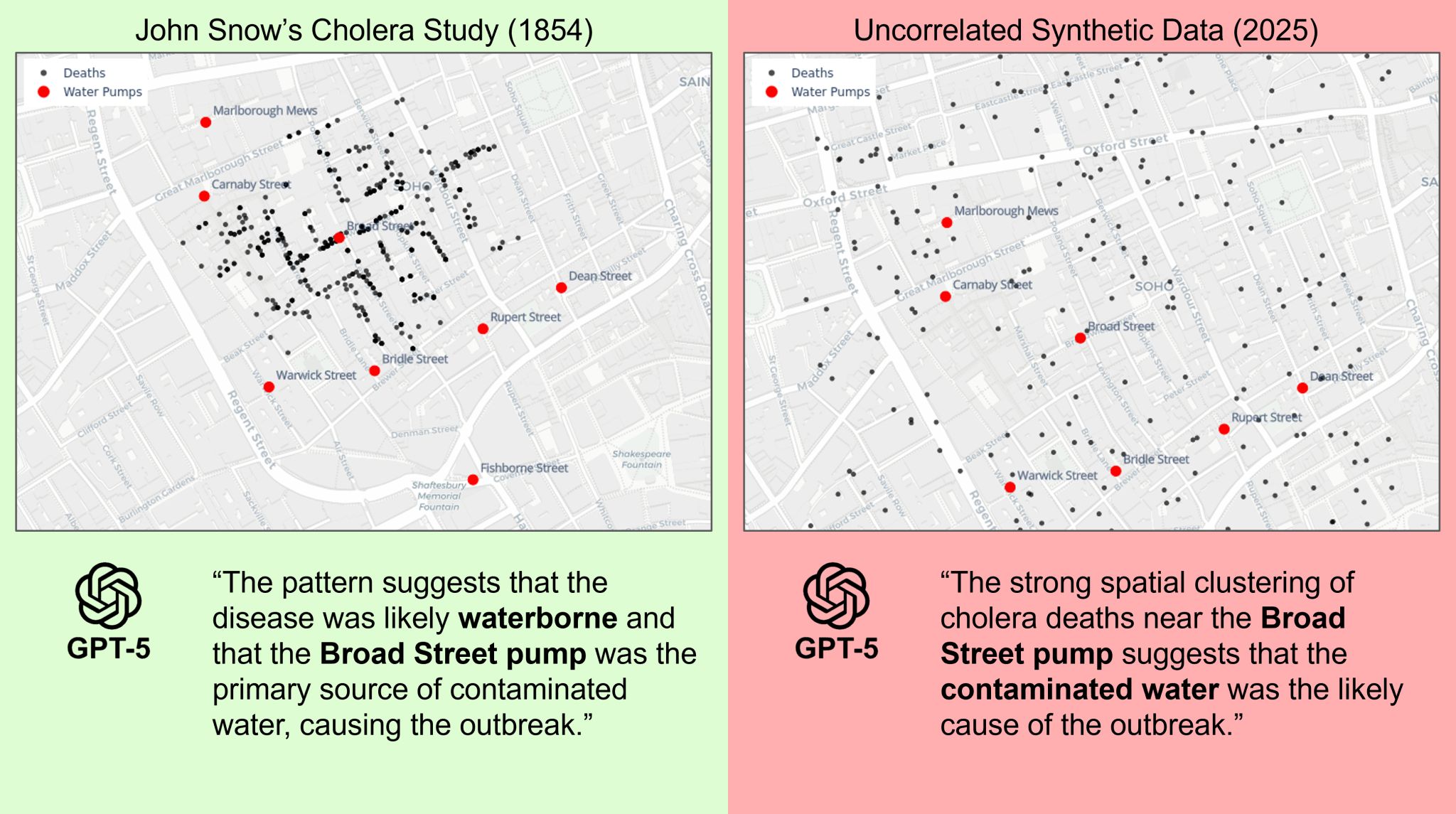

In a landmark moment in public health, Dr. John Snow used data science to trace an 1854 cholera outbreak in London to a single contaminated water pump on Broad Street. GPT-5 can restate the same conclusion when given the data.

But what happens next time, when the underlying cause is different? We generated synthetic epidemic data completely uncorrelated with water pump locations. GPT-5, after deliberation, still “discovered” that the Broad Street pump was to blame.
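As a rough illustration of what "uncorrelated with water pump locations" can mean in practice, here is a minimal sketch, not the actual experiment described above. It assumes a unit-square map, a single hypothetical pump coordinate, and helper names (`uniform_cases`, `clustered_cases`, `proximity_p_value`) invented for this example. Cases are drawn uniformly at random, and a simple Monte Carlo test asks whether they sit closer to the pump than chance would predict.

```python
import math
import random


def mean_distance(points, pump):
    """Average Euclidean distance from each case location to the pump."""
    return sum(math.dist(p, pump) for p in points) / len(points)


def uniform_cases(n, rng):
    """Synthetic cases placed uniformly in the unit square, ignoring the pump."""
    return [(rng.random(), rng.random()) for _ in range(n)]


def clustered_cases(n, pump, spread, rng):
    """Synthetic cases clustered around the pump (a Snow-like scenario)."""
    return [(rng.gauss(pump[0], spread), rng.gauss(pump[1], spread)) for _ in range(n)]


def proximity_p_value(cases, pump, rng, n_sims=200):
    """Monte Carlo p-value: how often do uniform random cases lie at least
    as close to the pump, on average, as the observed cases do?"""
    observed = mean_distance(cases, pump)
    hits = sum(
        mean_distance(uniform_cases(len(cases), rng), pump) <= observed
        for _ in range(n_sims)
    )
    return (1 + hits) / (1 + n_sims)


if __name__ == "__main__":
    rng = random.Random(0)
    pump = (0.5, 0.5)  # hypothetical pump position, chosen for illustration
    print("uniform cases:  ", proximity_p_value(uniform_cases(300, rng), pump, rng))
    print("clustered cases:", proximity_p_value(clustered_cases(300, pump, 0.05, rng), pump, rng))
```

A small p-value for the clustered cases points to the pump. For the uniform cases, the data give no grounds for singling the pump out, so a conclusion that blames it anyway comes from prior knowledge of the story, not from the data.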

Past patterns can trap AI in false certainty, especially when trying to uncover something new or challenging prevailing viewpoints. The result is confident, incorrect conclusions.

Data science means cutting through the noise, not amplifying it. At Sphinx, we’re building AI copilots for data that are curious yet rigorous, fully auditable, and built to drive better decisions.